In the previous part, we saw how the float structure is implemented; the size of its memory footprint; and how bytes are cached when accessed by the CPU.

In this part, we will see the implementation of list; the complexity of some of its operations; and its memory requirements.

PyListObject: Implementation of List

As list in Python can hold objects of different types and there is no need to specify the types in advance (like it is in a statically typed language, like C/C++/...), you probably think there is some overhead for this convenience.

And there is overhead in both memory requirements and the time demands of some common list operations (which we will see later).

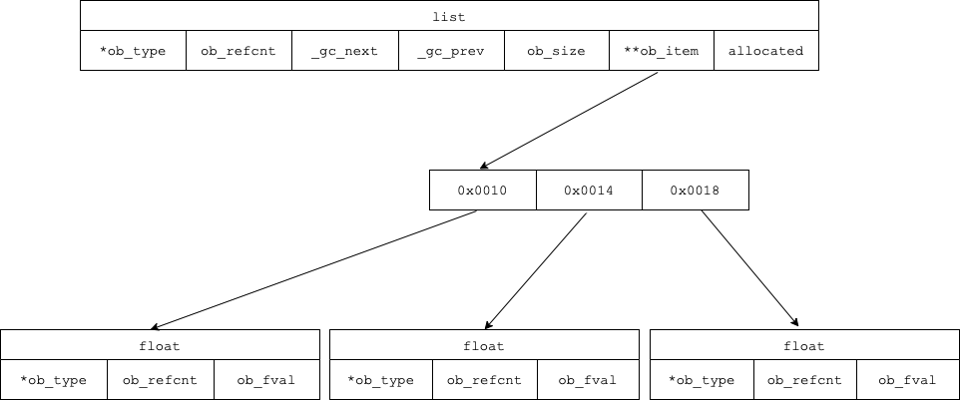

A list stores a pointer to an array of pointers to the actual elements. There is a good reason for both “levels” of pointers.

To fit objects of various previously-unknown types, the list cannot store the objects directly in its memory space, but it must point to them from the array of pointers (to a type PyObject). The reason why it cannot store them directly is that they can potentially have different sizes that may not be declared beforehand. And as we previously established that pointers to all Python types can be cast to a pointer to PyObject, that means any set of objects can be stored in a list.

For example, let lst1 be a list filled with items. Then we change an item (assign a different object to its index). If the items were stored directly in the array, the new object might not fit the desired position.

The pointer to the array itself is just how arrays are used in C (int *arr is the same as int arr[1]).

On top of the array of pointers, it holds PyVarObject (a base class for variable length containers in Python) that contrary to a PyObject also contains a counter for the number of elements it stores. Because it is a variable length array, it over-allocates (to be explained later), so it holds a variable for the number of bytes allocated. And because a list can “contain” (hold a reference) to itself, it must have those two pointers for garbage collection.

The structure then looks like this:

typedef struct { PyObject_VAR_HEAD PyObject **ob_item; Py_ssize_t allocated; } PyListObject;

where PyObject_VAR_HEAD is a macro for creating a PyVarObject.

Let us check the size of a list:

import sys a = [] print(sys.getsizeof(a)) # result: 56

Be aware that this is architecture-specific, version-specific, and most-likely OS specific, and the size (or its calculation) might differ slightly. I have tested it on a x86 macOS.

The memory overhead of a list is not an issue if there are many elements in the list. But if, for example, we want to imitate a multidimensional array using list of lists of . . . list, then the overhead size of a list can become significant.

In the previous part of this article, we talked about caches and a little bit about algorithms that (in)efficiently utilize them. This relates to the Python list object. When we scan a list in the context of caches, it behaves similarly to a linked list: Each read element causes a cache miss and a new block of bytes must be loaded from memory. That makes the whole process slow by design, which is especially true considering that the loaded elements are never primitive types, but are objects often several times larger than the actual value they hold (e.g., float or int).

Appending to a List

First, a list needs to be created. If the number of elements to be stored is not known in advance, one usually creates an empty list and then appends objects to it. However, if the number of items is known, then it would be faster (and more memory efficient) to create a list with the exact size required.

Readers familiar with “flexible” arrays should feel free to skip the next few paragraphs.

Let us imagine we want to have an array/list, but we don’t know the number of items that will be added to it at runtime. If the allocated space of the array/list exactly accommodates the number of items currently in it, it would have to be reallocated with every added item (resulting in O(n) complexity per append, where n is the current number of items).

To overcome the reallocation preceding every append, we always allocate a little more space than necessary. If the allocated space is large enough (a multiple of the number of items), the amortized time complexity of append can be brought down to O(1). Amortized, in this context, basically denotes the average time complexity of an append in a series of appends. The worst-case complexity can be more severe than the amortized complexity (which happens when reallocation is performed).

Similar claims also hold true for removal operations and array/list shrinking. Note: While the array only grows, amortized complexity is the same as average complexity, but once removal of elements and “shrinking” of the array is allowed, there is a difference, but I won’t go into that.

See Mareš’s book for an exaplanation of amortized analysis.

What is interesting in CPython is how the list actually grows.

Python increases the space for the list by approximately a multiple of 1.125. The newly allocated memory after reallocation:

new_allocated = ((size_t)newsize + (newsize >> 3) + 6) & ~(size_t)3;

Where newsize is the number of items in the list (after appending the last item). It basically says the new allocated memory is newsize * 9/8 + 6 but with the last two bits set to 0 (making the final number divisible by 4).

Note: The factor is a little different for very small lists.

Why is the factor so small and computed this way? I would love to know. In C++, the growth factor of std::vector in GCC is 2, and in Microsoft compiler it is reportedly 1.5. I suspect that since objects in Python are relatively large, the interpreter does not want to waste space.

Anyway, it’s clearly better to allocate the desired space beforehand rather than performing a series of appends.

Let’s see some examples of operations on a list.

Examples

First, let us compare two methods for list initialization. The slow option:

foo = []

for _ in range(1_000_000): # finishes in 82.1 ms

foo.append(1.0)A faster option:

# finishes in 53.3 ms foo = [1.0 for _ in range(1_000_000)]

There is a much faster option, but not a very practical one since it can only be used to repeat a single element:

foo = [1.0] * 1_000_000 # finishes in 2.81 ms

Note: Beware this construction works differently for mutable and immutable types.

Now let’s look at the size of one element in a list vs in an array.array and the time needed to scan it.

import random, array, sys

foo_list = [random.random() for _ in range(1_000_000)]

# using 'd' == double

foo_array = array.array('d', (random.random() \

for _ in range(1_000_000)))

# need to take size of every element,

# as they are not stored in the `list`

# but referenced

print((sys.getsizeof(foo_list) \

+ sum([sys.getsizeof(i) for i in foo_list]) \

) / len(foo_list))

# returns: 32.448728

print(sys.getsizeof(foo_array) / len(foo_array))

# returns: 8.1838Note that we don’t call sys.getsizeof on every element of the array. It’s because they are already counted in the overall size of the array as they are stored directly in the structure. Indeed, if we reduce the precision of the decimal number (from 64b to 32b, 'd' to 'f') we get half the size:

foo_array_f = array.array('f', (random.random() \

for _ in range(1_000_000)))

print(sys.getsizeof(foo_array_f) / len(foo_array_f))

# returns: 4.000064As we can see, items are much more efficiently stored in an array than they are in a list.

Now, let’s compare the scanning speed of list vs array.array:

sum_list = 0.0

sum_array = 0.0

for i in foo_list: # finishes in 35 ms

sum_list += i

for i in foo_array: # finished in 43.7 ms

sum_array += iWhy is array.array slower? Even though the elements of an array are efficiently stored in memory, when reading them an object is created for each (I am simplifying a little bit, as there are some optimizations), which makes the whole scan slower than a scan of a list that only returns references to the float objects.

Note that this can be sped up by using the sum() function instead of the loop (to 5.74 ms for list and 14.3 ms for array. And just as an interesting side note, in numpy, using the numpy.sum() function, the sum takes 0.5 ms.

Conclusion

We saw that using a list for storing or performing operations on float values is quite inefficient. A list of floats is represented in memory as an array of pointers to the PyFloat structure. So for a list of n floats, the consumed memory is approximately 4*n*8 bytes (omitting all additive constants and possible overallocation).

Paying attention to how a list is created can also be beneficial. Note that the described “inefficiencies” of list and float are not the only ones. There are many more, some of them stemming from Python being an interpreted language. That means some can be solved by third-party libraries.

On the other hand, as Donald Knuth once said (or at least it’s attributed to him): premature optimization is the root of all evil. So unless you repeatedly create large lists, you probably shouldn’t make the above “list creation” optimizations. However, working with real numbers is quite common in Python in the context of data analysis or machine learning. In such cases it makes sense to use more efficient implementations, such as the one offered by numpy.

Sources

- https://github.com/python/cpython/blob/main/Include/cpython/listob h

- https://github.com/python/cpython/blob/Include/internal/pycore_gc.h

- https://docs.python.org/3/c-api/structures.html

- http://mj.ucw.cz/vyuka/dsnotes/ - Data Structures lecture notes

- https://github.com/gcc-mirror/gcc/blob/libstdc%2B%2B-v3/include/b its/vector.tcc - growth factor of std::vector in gcc

- https://github.com/gcc-mirror/gcc/blob/libstdc%2B%2B-v3/include/b its/stl_vector.h