At Heureka, our front-end projects are rigorously tested with both unit and end-to-end (E2E) tests. We rely on Cypress, a robust tool with strong community support and a proven track record, to meet our E2E testing needs. However, as our test suites grew, execution times became a significant bottleneck. To accelerate development, we explored Playwright, a promising alternative offering the ability to interact with browsers' rendering engines directly and built-in test parallelization.

Brief technology comparison

Cypress is one of the most widely used tools for E2E testing. It focuses on JavaScript applications and is simple to set up. Cypress uses the jQuery library to access the Document Object Model directly. It also includes a very useful graphical interface for visualising scenarios and debugging.

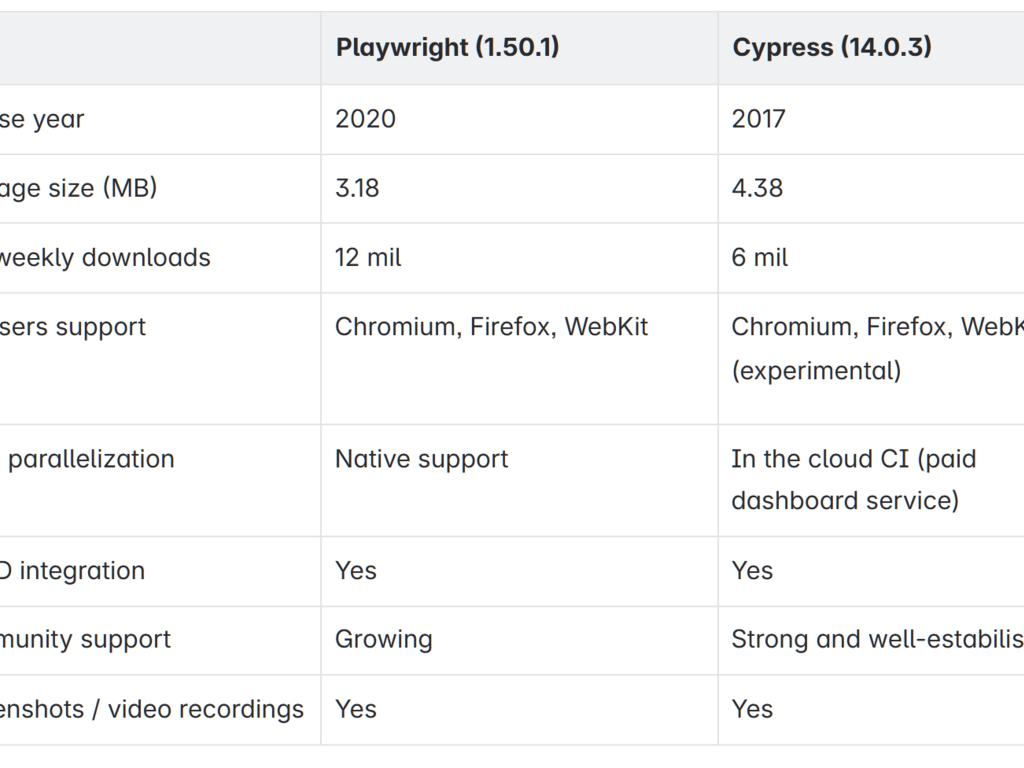

Although Cypress has a longer history, Playwright now has almost twice as many weekly downloads. It also differs in architecture: it communicates with browsers over WebSockets and offers native test parallelization and cross-browser support.

The table below shows a basic comparison of Playwright and Cypress.

There are many articles comparing these two technologies, and you can also ask an AI tool for a nice summary :-). In this paper, we focus on quantifying the differences between the frameworks to decide which is more suitable for our front-end projects.

Measurements

All measurements began with three identical test suites, each executed once within our project. We implemented these tests using both Cypress and Playwright, ensuring identical scenarios. Chrome was used in headless mode for all browser interactions. Playwright's configuration, as defined in its configuration file, is as follows:

    {
      name: 'Google Chrome',
      use: { ...devices['Desktop Chrome'], channel: 'chrome' },
    },

1. Time comparison

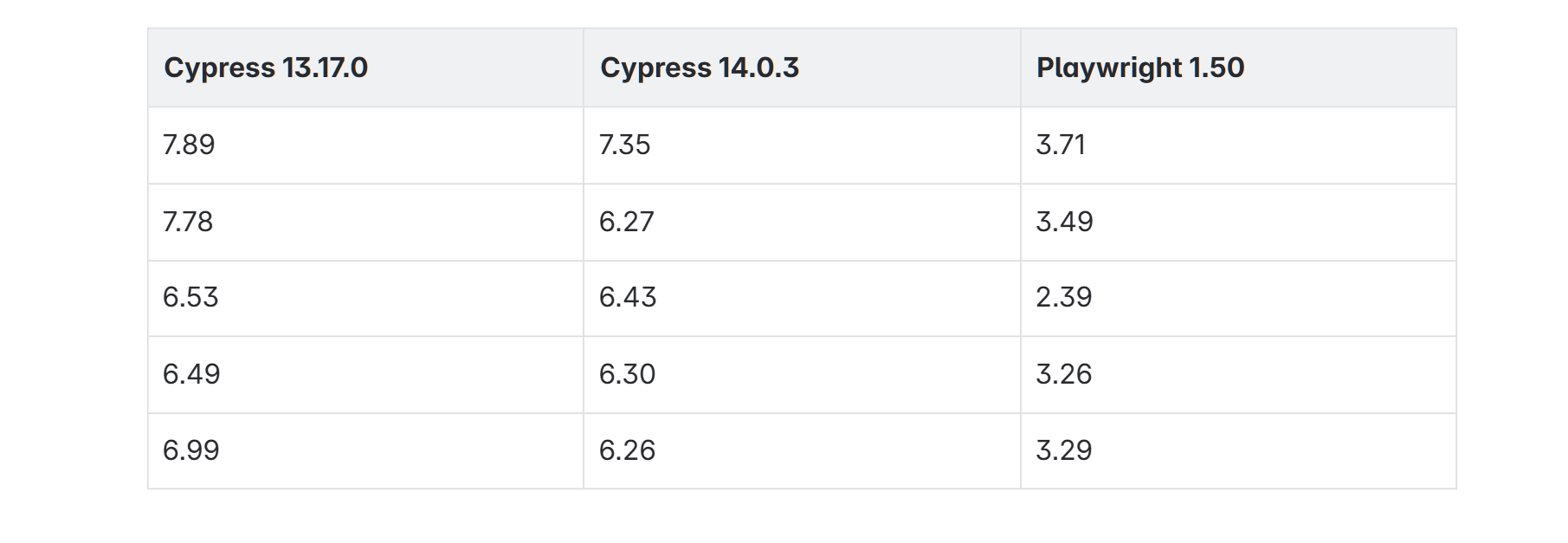

The initial measurement focused on a time comparison between Cypress 13, Cypress 14, and Playwright. Tests were executed five times for every framework using the following commands:

yarn cypress run --browser chrome (Cypress) and yarn playwright test (Playwright)

Test results indicate that Playwright executes single test scenarios approximately twice as fast as Cypress. Furthermore, Cypress 14 demonstrates an average performance improvement of 0.6 seconds compared to Cypress 13.

All following comparisons are conducted using Cypress 14.

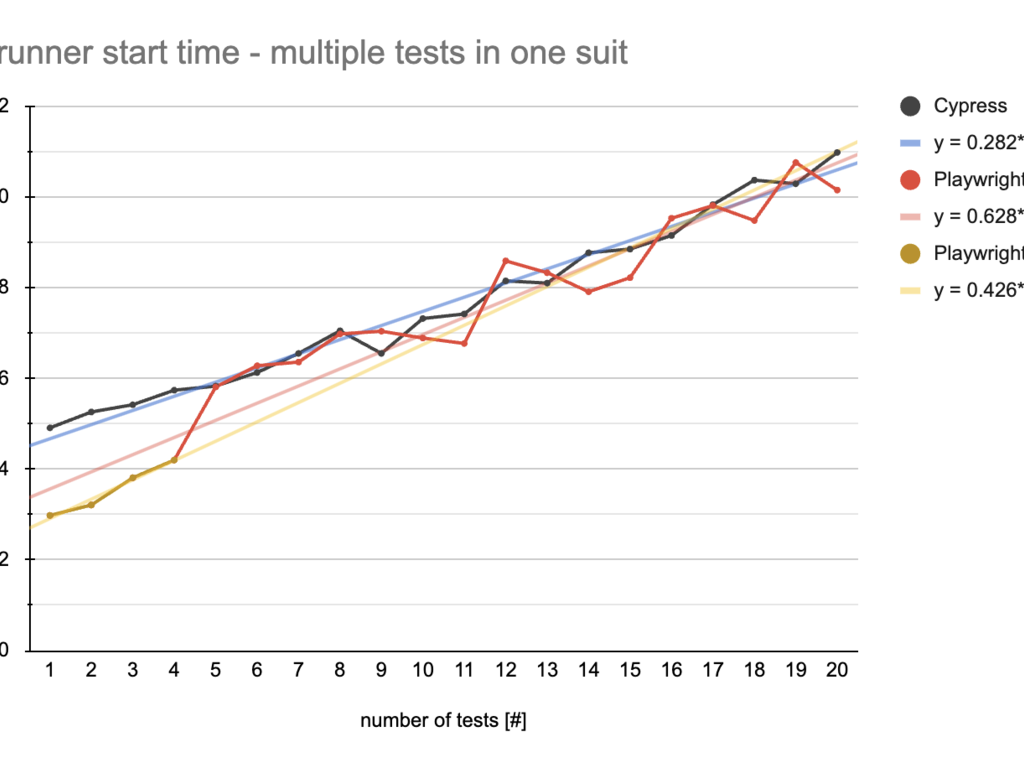

2. Test runner start time

This measurement aimed to estimate test suite startup time. We progressively increased the number of identical tests within a single suite from one to twenty. The suite's startup time was then determined by the y-intercept of the linear regression function.

The following commands were used for test execution: yarn cypress run --browser chrome --spec <path_to_cy_suit> for Cypress, and yarn playwright test <path_to_pw_suit> for Playwright.
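The intercept estimate described above can be sketched as an ordinary least-squares fit of total run time against the number of tests. This is a minimal illustration, not the script used for the measurements: the function name `fitLine` and the sample timings are hypothetical, chosen only to show how the y-intercept is read as the startup time and the slope as the time per test.

```javascript
// Ordinary least-squares fit of y = slope * x + intercept.
// x: number of tests in the suite, y: measured wall-clock time in seconds.
function fitLine(points) {
  const n = points.length;
  if (n < 2) throw new Error('need at least two points');
  const meanX = points.reduce((sum, p) => sum + p.x, 0) / n;
  const meanY = points.reduce((sum, p) => sum + p.y, 0) / n;
  let sxy = 0;
  let sxx = 0;
  for (const { x, y } of points) {
    sxy += (x - meanX) * (y - meanY);
    sxx += (x - meanX) ** 2;
  }
  if (sxx === 0) throw new Error('all x values are identical');
  const slope = sxy / sxx;
  const intercept = meanY - slope * meanX;
  return { slope, intercept };
}

// Hypothetical timings (NOT the measured data): 3 s startup plus 1.5 s per test.
const samples = [1, 2, 5, 10, 20].map((tests) => ({ x: tests, y: 3 + 1.5 * tests }));
const { slope, intercept } = fitLine(samples);
console.log(`startup about ${intercept.toFixed(2)} s, per test about ${slope.toFixed(2)} s`);
```

With real measurements the points will not lie exactly on a line, so the intercept is only an estimate of the startup time.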

The data show a start time of 4.88 seconds for Cypress, roughly 1.7 times Playwright's 2.91 seconds. While Playwright and Cypress have similar test file run times when there are more than three tests in a file, Playwright's execution time appears to jump after the fourth measurement point. This jump likely corresponds to the use of 4 workers for parallel test execution.

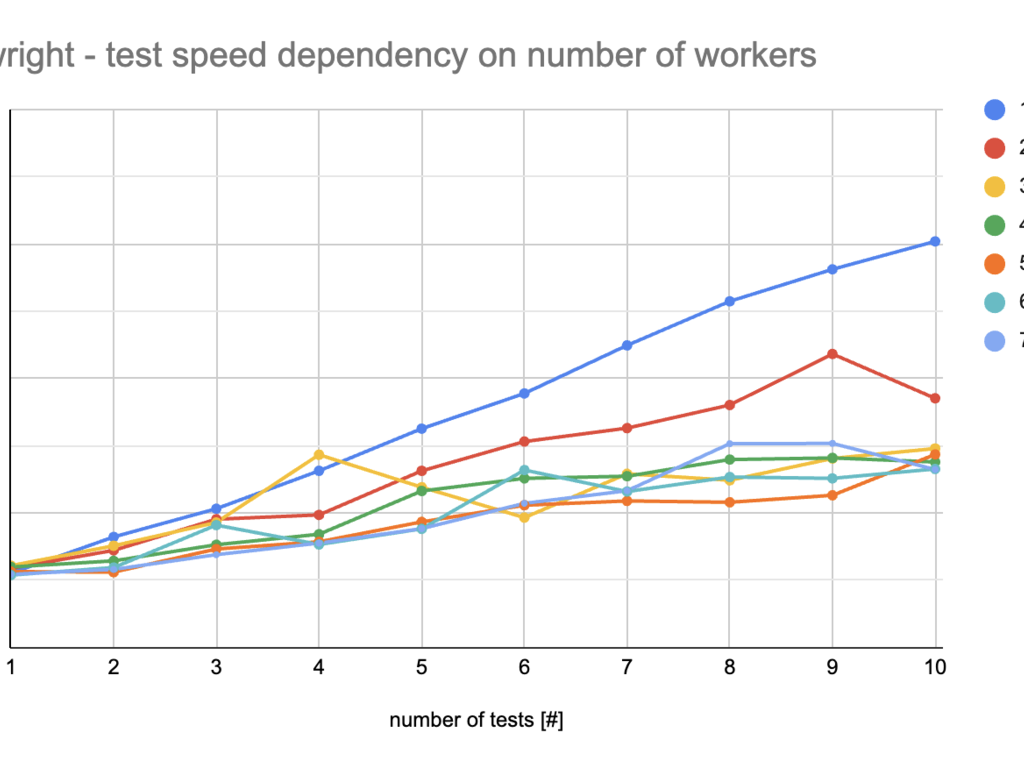

3. Playwright – Time dependency on the number of workers

The next measurement focused on Playwright only: how execution time depends on the number of tests in one test suite for different numbers of workers. We increased the number of tests from 1 to 10. The page was loaded before each test. The following command was used for n workers: yarn playwright test playwright/suggester.spec.js --workers=<n>.

With fewer than four tests per file, the number of workers makes little practical difference, especially considering measurement error. However, using only one worker significantly increases test execution time compared to using multiple workers. As observed in previous tests, a similar pattern emerges with higher worker counts. The optimal range therefore appears to be between four and six workers. I would recommend tuning the number of workers to your own use case.
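One way to reason about why the worker count matters is a simplified "wave" model. The sketch below is an assumption for illustration only, not how Playwright actually schedules work: it assumes every test takes the same time, that tests in the file are allowed to run in parallel, and that starting a worker costs nothing. The function `estimateWallTime` and the 2-second test duration are hypothetical.

```javascript
// Simplified model: n equally long tests spread over w workers run in
// ceil(n / w) sequential "waves", so wall time grows in steps.
function estimateWallTime(testCount, workers, secondsPerTest, startupSeconds = 0) {
  if (workers < 1) throw new Error('workers must be >= 1');
  const waves = Math.ceil(testCount / workers);
  return startupSeconds + waves * secondsPerTest;
}

// Hypothetical 2 s test; compare 1 worker against 4 workers.
for (const testCount of [1, 4, 5, 8, 9]) {
  const oneWorker = estimateWallTime(testCount, 1, 2);
  const fourWorkers = estimateWallTime(testCount, 4, 2);
  console.log(`${testCount} tests: 1 worker ${oneWorker} s, 4 workers ${fourWorkers} s`);
}
```

Under these assumptions the estimate with 4 workers stays flat up to four tests and steps up at the fifth, which is the kind of jump described for the 4-worker measurement. Real runs add per-worker startup cost and uneven test durations, so measured curves will be less regular.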

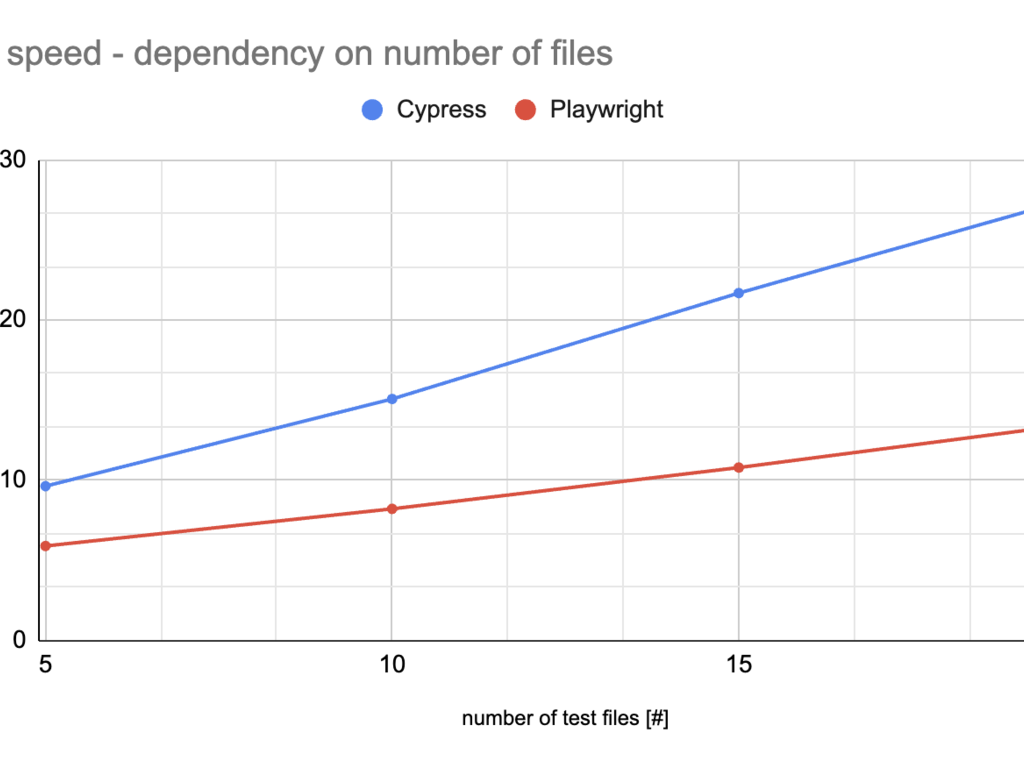

4. Test speed according to the number of test files

In the next part of the paper, we compare how run time depends on the number of test files. We increased the number of test files in steps of 5, each file containing the same test with the same scenario. To reduce measurement error, we repeated the measurement five times for every number of test files. The commands used were yarn cypress run --browser chrome for Cypress and yarn playwright test for Playwright, with the default number of workers (4 in our case).
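Repeated runs like the five measurements described above are usually summarized by their mean and spread. The following is a minimal sketch of such a summary; the helper `summarize` and the sample durations are hypothetical and are not the values measured in this paper.

```javascript
// Mean and sample standard deviation of repeated timings (in seconds).
function summarize(durations) {
  const n = durations.length;
  if (n === 0) throw new Error('no measurements');
  const mean = durations.reduce((sum, d) => sum + d, 0) / n;
  // Sample variance divides by n - 1; a single run has no spread estimate.
  const variance = n > 1
    ? durations.reduce((sum, d) => sum + (d - mean) ** 2, 0) / (n - 1)
    : 0;
  return { mean, stdDev: Math.sqrt(variance), runs: n };
}

// Hypothetical five runs for one configuration (not the measured values).
const runs = [10, 12, 11, 13, 14];
const summary = summarize(runs);
console.log(`mean ${summary.mean.toFixed(2)} s, std dev ${summary.stdDev.toFixed(2)} s over ${summary.runs} runs`);
```

Reporting the standard deviation next to the mean makes it easier to judge whether a difference between two configurations is larger than the run-to-run noise.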

The test results demonstrate a significant time advantage for Playwright over Cypress. When the number of test files doubles, the Cypress test duration nearly doubles as well, consistent with the data from this paper: Comparing Test Execution Speed of Modern Test Automation Frameworks: Cypress vs. Playwright. Playwright's duration, however, increases at a much slower rate. This clearly illustrates Playwright's superior performance when running multiple tests within a project. The test speed might be further adjusted by increasing the number of workers in the case of Playwright.

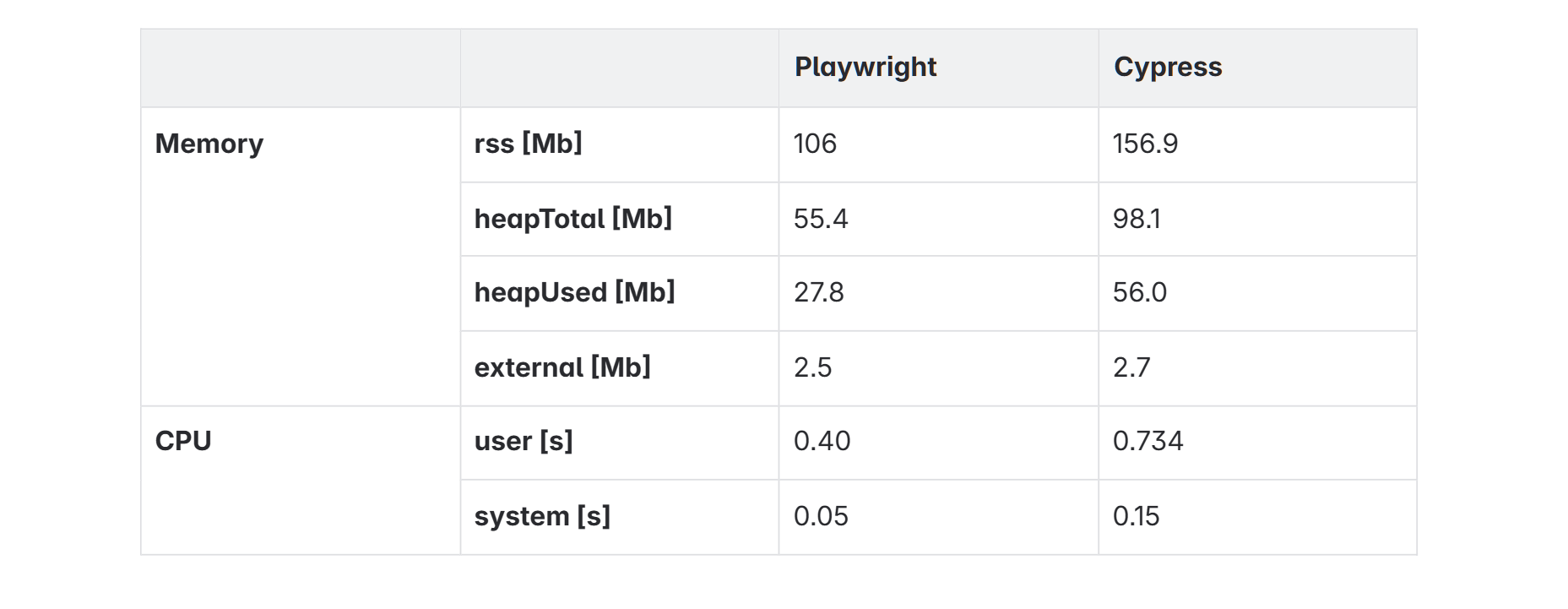

5. Resource estimation

The goal of this measurement was to find a way to compare the load and resource usage of the two frameworks. Cypress can log memory and CPU usage via the DEBUG environment variable, but that was not suitable for our measurement because Playwright does not offer the same debug tool, so the results would not be comparable. Instead, the data were measured using Node.js process metrics directly in the test file.

    const memoryUsage = process.memoryUsage();
    const cpuUsage = process.cpuUsage();

In Playwright, it was possible to log those metrics directly from the test code. Cypress test code runs in the browser, so we needed to register Node tasks in cypress.config.js and call them from the test with cy.task().

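    // Registers Node-side tasks that a test can call with cy.task()
    setupNodeEvents(on) {
      on('task', {
        // Log the memory usage of the Node process running the task
        logMemoryUsage() {
          const memoryUsage = process.memoryUsage();
          console.log('Memory Usage:', memoryUsage);
          return null; // a task must return a value or null, never undefined
        },
        // Log the CPU time consumed by the Node process running the task
        logCPUUsage() {
          const cpuUsage = process.cpuUsage();
          console.log('CPU Usage:', cpuUsage);
          return null;
        },
      });
    }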

We ran only one test with these metrics, recording them at the end of the test.
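The raw objects returned by these Node.js calls are easier to compare after unit conversion: process.memoryUsage() reports bytes and process.cpuUsage() reports microseconds of user and system CPU time. The sketch below shows one possible conversion; the helper name `formatUsage` and the choice of fields are assumptions for illustration, not part of the original measurement code.

```javascript
// process.memoryUsage() reports bytes; process.cpuUsage() reports microseconds.
function formatUsage(memoryUsage, cpuUsage) {
  const toMiB = (bytes) => bytes / (1024 * 1024);
  const toMs = (micros) => micros / 1000;
  return {
    rssMiB: toMiB(memoryUsage.rss),           // resident set size of the process
    heapUsedMiB: toMiB(memoryUsage.heapUsed), // V8 heap currently in use
    cpuUserMs: toMs(cpuUsage.user),           // CPU time spent in user code
    cpuSystemMs: toMs(cpuUsage.system),       // CPU time spent in system calls
  };
}

const usage = formatUsage(process.memoryUsage(), process.cpuUsage());
console.log(usage);
```

Note that both calls describe only the Node.js process they run in, not the browser process that renders the page under test.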

Because the measurement was taken directly in the test, we cannot quantify the real memory and CPU usage for the whole test run. Still, the data clearly show that Playwright uses significantly fewer resources than Cypress.

Conclusion

In conclusion, our quantification of Cypress and Playwright for our front-end testing needs at Heureka reveals compelling insights. While Cypress has been a reliable and well-supported tool, our measurements consistently demonstrate Playwright's superior performance, particularly in terms of execution speed and resource utilization. Playwright executed individual test scenarios significantly faster and scaled much better as the number of test files increased. Playwright also showed notably lower memory and CPU usage at the point where we measured it, although we could not quantify usage for the whole test run. These findings strongly suggest that transitioning to Playwright could alleviate the bottleneck caused by our growing E2E test suites and accelerate development cycles. While the optimal number of workers in Playwright should be fine-tuned to specific project needs, the framework's native parallelization offers a clear advantage. Based on this analysis, adopting Playwright appears to be a strategic move to improve the efficiency and scalability of our front-end testing at Heureka.