Successful products powered by machine learning are tricky to develop. There is not one set of practices that fits most use cases. The ecosystem of tools is unstable. Technical debt is easy to produce. Deployment considerations have to be tackled early in the development cycle. Naturally, it is very easy to overspend on the effort without a return in sight.

Why does Heureka need Machine Learning?

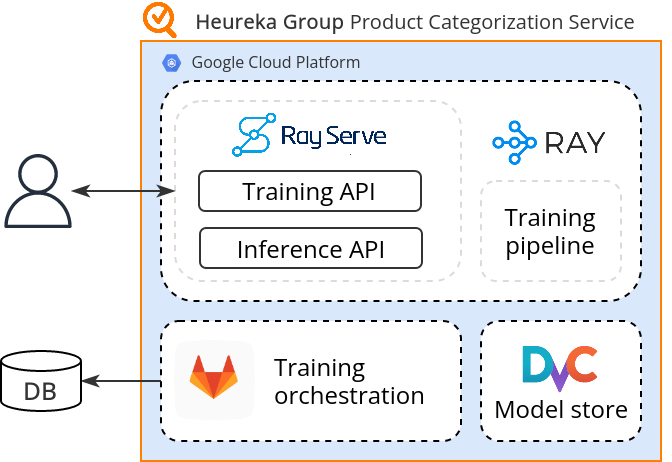

Products featured on our websites are sorted into categories. This business process, typically done by hand, is a natural fit for machine learning. With enough quality data at our disposal, our computer systems can be trained to automatically classify products by their textual description.
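To make the idea concrete, here is a minimal sketch of text-based categorization using a bag-of-words Naive Bayes classifier written in plain Python. It is only an illustration of the technique, not the model we run in production; the class name `NaiveBayesCategorizer`, the category labels, and the training descriptions are all invented for this example.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


class NaiveBayesCategorizer:
    """Multinomial Naive Bayes over word counts, with add-one smoothing."""

    def fit(self, descriptions, categories):
        self.doc_counts = Counter(categories)    # number of documents per category
        self.word_counts = defaultdict(Counter)  # word frequencies per category
        for text, category in zip(descriptions, categories):
            self.word_counts[category].update(tokenize(text))
        self.vocabulary = {w for c in self.word_counts.values() for w in c}
        return self

    def predict(self, description):
        tokens = tokenize(description)
        total_docs = sum(self.doc_counts.values())
        best_category, best_score = None, -math.inf
        for category, n_docs in self.doc_counts.items():
            counts = self.word_counts[category]
            denominator = sum(counts.values()) + len(self.vocabulary)
            # Log prior plus the sum of smoothed log likelihoods.
            score = math.log(n_docs / total_docs)
            for token in tokens:
                score += math.log((counts[token] + 1) / denominator)
            if score > best_score:
                best_category, best_score = category, score
        return best_category


# Hypothetical training data, invented for this example.
train_texts = [
    "wireless bluetooth headphones with noise cancelling",
    "over-ear headphones with microphone",
    "stainless steel electric kettle 1.7 l",
    "glass kettle with temperature control",
]
train_labels = ["headphones", "headphones", "kettles", "kettles"]

model = NaiveBayesCategorizer().fit(train_texts, train_labels)
print(model.predict("bluetooth headphones for running"))
print(model.predict("electric kettle with glass body"))
```

A real categorizer would be trained on far more data and would likely use a stronger model, but the interface stays the same: a description goes in, a category comes out.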

Let’s say we wanted to improve this process by doing the same with product images. We now have two algorithms working out the final classification. Predictive solutions involving multiple components are much more complex to manage than a single predictive component wrapped in a Flask app. This new dimension opens a whole range of scalability considerations that do not have trivial solutions.
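One simple way to let two classifiers work out a final answer is to average their per-category probabilities. The sketch below assumes each model returns a dictionary of category probabilities; the function name `combine_predictions`, the `text_weight` parameter, and the example numbers are hypothetical, and this is not necessarily how our composite is built.

```python
def combine_predictions(text_probs, image_probs, text_weight=0.5):
    """Blend two per-category probability dicts with a weighted average.

    Returns the winning category and the blended scores.
    """
    if not 0.0 <= text_weight <= 1.0:
        raise ValueError("text_weight must be between 0 and 1")
    categories = set(text_probs) | set(image_probs)
    combined = {
        category: text_weight * text_probs.get(category, 0.0)
        + (1.0 - text_weight) * image_probs.get(category, 0.0)
        for category in categories
    }
    best = max(combined, key=combined.get)
    return best, combined


# Hypothetical outputs of a text model and an image model for one product.
text_probs = {"headphones": 0.6, "speakers": 0.4}
image_probs = {"headphones": 0.3, "speakers": 0.7}

equal_best, equal_scores = combine_predictions(text_probs, image_probs)
text_heavy_best, _ = combine_predictions(text_probs, image_probs, text_weight=0.8)
print(equal_best, text_heavy_best)
```

Even in this toy form, the result depends on both models being available and on a weight that someone has to tune, which hints at why a composite is harder to operate than a single model.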

Without the right architectural design, adding an extra classifier to the composite would only lead to more technical debt and force us into solving the mess by hiring more people. That does not sound too sustainable for a feature that has yet to fully prove its worth.

Looking for the right tool

The following list of requirements was developed as we learned the strengths and weaknesses of various deployment solutions.

- Data versioning must fit the GitOps philosophy.

- We want Python support because it is popular among data scientists.

- New components must be encapsulated and easy to integrate.

- System components must scale independently of each other.

- We want to use successful open source projects, to manage the risk of vendor lock-in.

It was important to us that whatever tooling we chose was aligned with our vision of systems development. On top of that, the solution had to be ready for the public cloud, because Heureka is transitioning away from on-premise infrastructure.

I came across Ray, a distributed processing framework from Anyscale. I was interested in hyperparameter tuning, but it was their serving library, Ray Serve, that turned out to be a great fit for most of our requirements.

Distributed tools come with a learning curve, and Ray is no different. To suggest that following their tutorial solved MLOps for us would be an overstatement. However, Ray does promise a Python-first, scalable, and library-agnostic model serving solution.

Our road to adopting Ray

So how did we switch to Ray?

First, we ported our model over to FastAPI. FastAPI has many benefits over Flask, which I won't get into. This was a necessary step because Ray Serve has a native FastAPI integration.

    from fastapi import FastAPI

    app = FastAPI(title='Categorization Inference API')

Next problem: How to scale up?

We knew Ray's core library was able to scale up and distribute workloads to thousands of nodes, but unfortunately, Ray Serve at the time did not come with autoscaling capabilities. We reached out to Anyscale through their Slack community. It turned out autoscaling was already on their roadmap. We tested it out when it was committed to the master branch. The API is still experimental but shows great promise.

    @serve.deployment(name='text-model', version='v1', _autoscaling_config={
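The snippet above is cut off before the configuration values, so we will not guess at them. As a rough illustration of what a request-based autoscaler decides, here is a generic sketch in plain Python. It is not Ray Serve's actual algorithm, and the function name and parameters (`ongoing_requests`, `target_per_replica`, `min_replicas`, `max_replicas`) are assumptions made for this example.

```python
import math


def desired_replicas(ongoing_requests, target_per_replica, min_replicas, max_replicas):
    """Replicas needed to keep per-replica load at or below the target,
    clamped to the configured bounds."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    if min_replicas > max_replicas:
        raise ValueError("min_replicas must not exceed max_replicas")
    needed = math.ceil(ongoing_requests / target_per_replica)
    return max(min_replicas, min(max_replicas, needed))


# Hypothetical load levels with a target of 10 in-flight requests per replica.
print(desired_replicas(45, 10, min_replicas=1, max_replicas=8))   # moderate load
print(desired_replicas(0, 10, min_replicas=1, max_replicas=8))    # idle
print(desired_replicas(500, 10, min_replicas=1, max_replicas=8))  # traffic spike
```

In this sketch, the lower bound decides how far the system can shrink when idle, and the upper bound caps the cost of a traffic spike.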

Our Google Kubernetes Engine production environment offers zero-to-N node autoscaling. To decrease cloud costs, it makes sense for expensive GPU nodes to be scaled down to zero during times of low demand. Unfortunately, the current semantics do not allow Ray Serve’s autoscaling to do that, but we are talking to Anyscale to make that a reality as well.

If we happen to know the workload schedules, a quick solution would be to control the scaling through a web API:

    @app.put('/model/{name}/deployment')
    async def update_deployment(self, name: str, num_replicas: int, gpu: bool):
        if num_replicas == 0:
            # Zero replicas requested: remove the deployment entirely.
            TextModelBackend.options(name=name).delete()
        else:
            # Deploy (or redeploy) with the requested replica count and GPU setting.
            TextModelBackend.options(
                name=name,
                num_replicas=num_replicas,
                ray_actor_options={'num_gpus': int(gpu)},
            ).deploy()

Conclusions

Keeping cloud costs low is only one side of the coin. The code needs to stay maintainable, meaning we should be using as few tools, major libraries, and services as possible. That should simplify the onboarding of new people. No backend developer wishes to be dropped in the middle of a pipeline jungle, which is what many machine learning systems end up becoming.

We have good reasons to expand the adoption of Ray across Heureka by utilizing:

- Ray Tune, a hyperparameter search library that distributes the search schedule across multiple nodes.

- Ray’s MLflow integration, which will enable other teams to serve their logged models directly from MLflow.

We have also implemented a training pipeline using the core Ray library to have both training and inference under one roof. Other teams can expect this offering to be available to them early next year.

Full disclosure: Anyscale’s Ray Serve team provided input prior to publication. A sincere thank you to them and Heureka’s Tech Community team.